Responsible AI

Key Takeaway:

From 2024 onward, AI integration is expected to drive a significant shift toward skills-based hiring and flexible work arrangements. This evolution redefines traditional life stages and employment norms, emphasizing personal well-being and efficiency. As AI reshapes roles, it brings both challenges and opportunities, and realizing its potential for productivity and innovation across industries calls for a balanced approach.

Trend Type: Social & Business

Sub-trends: Life Deconstruction, Working for Balance, From creators to curators, Augmented connected workforce, AI Ways of Work

Use Cases

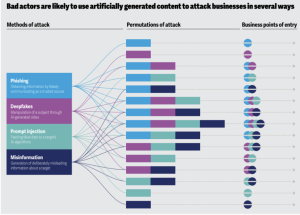

AI Wary: Upstart is a fintech firm that uses AI for credit scoring. Its machine learning underwriting model evaluates borrower risk using factors beyond traditional credit history, including education, employment, and income, and draws on a wide range of data points to assess creditworthiness and determine loan eligibility.

Growing regulatory & privacy demands: The Stanford Internet Observatory, a cross-disciplinary lab for the study of abuse in IT, works across several areas, including trust and safety, information integrity (including misinformation), emerging tech (including AI), and policy.

Sub-Trend Sources

Customer Trust: Forrester Predictions

AI Wary: Ford Trends, Economist Ten Business Trends

Responsible AI: Cisco Trends, Kantar's Media Trends, Future Today Institute

Marketers become privacy champs: Forrester Predictions

GenAI Regulation: Deloitte TMT Predictions, Forrester Tech Predictions Europe

Growing regulatory & privacy demands: MC Blogs IT Trends, MIT Strategy Summit Report, Future Today Institute

AI TRiSM: Gartner Strategic Trends, PwC AI Trends, Future Today Institute

GenAI Governance: BCG The Next Wave, Deloitte Tech Trends

AI Trust: Ford Trends (social), Future Today Institute, PwC AI Trends, Deloitte Tech Trends, Finance & Development

What to Read Next

All Things Data

The increasing complexity and scale of data within modern enterprises lead to a shift in data management strategies, catalyzing a trend towards more dynamic, integrated, and technologically advanced approaches. This[...]

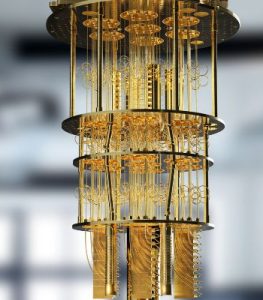

Quantum Computing

Latest Advances: Although Quantum Computing was already present in last year’s Digital Trends, in 2024 the race to develop viable quantum systems is intensifying, with tech giants like IBM, Google,[...]

Eco Tech

The “Eco Tech” trend reflects the growing integration of technology with environmental sustainability efforts. Among the forefront technologies is carbon capture. Industries such as cement and steel are increasingly adopting[...]
