Ambivalent Reality

Key Takeaway:

As generative AI fuels a surge in deepfakes, misinformation, and scams, digital trust is eroding. According to PwC, 67% of security executives say generative AI has expanded their attack surface. Consumers increasingly question what’s real online, and that doubt is affecting commerce and engagement. Governments are responding with regulation, but gaps persist. Transparency and AI explainability are now essential, particularly in areas like children’s digital safety. As ambivalence toward digital content rises, brands must offer verifiable, human-centered experiences. Those that prioritize trust, authenticity, and accountability will shape the next era of consumer connection in a world where what is real can no longer be taken for granted.

Trend Type: Social & Business

Sub-trends: Ambivalent Reality, LLMs' Explainability, Children's Digital Harm

The Erosion of Digital Trust

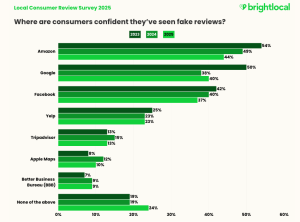

As generative AI becomes more embedded in everyday life, trust in digital spaces is eroding. The ease with which content can be generated and manipulated has unleashed a wave of scams, deepfakes, fake reviews, and misinformation. According to PwC’s 2025 Global Digital Trust Insights report, 67% of security executives say that generative AI has expanded their attack surface over the past year, citing phishing, deepfakes, and data manipulation as growing concerns. These findings point to a widening credibility gap online, one that is now directly affecting digital commerce: shoppers hesitate before making purchases, users question whether websites are real, and online reviews are met with growing skepticism.

A recent BrightLocal survey of U.S. consumers found that 75% are concerned about fake online reviews. Many respondents also say they are confident they’ve seen fake reviews on major platforms such as Amazon (44%), Google (40%), and Facebook (37%). Because of these concerns, consumers are increasingly taking steps themselves to verify the legitimacy of an online store or product before making a purchase. Interestingly, the share of consumers who say they are confident they’ve spotted a fake review in the last year has also shifted (24% vs. 19% in 2024).

Source: BrightLocal, Local Consumer Review Survey 2025

This trust vacuum is fueling changes in behavior. Traditional search is losing ground as users grow frustrated with SEO manipulation and low-quality AI-generated content. Instead, people are turning to peer-validated platforms like Reddit, where recommendations feel more human and less shaped by algorithms. The rise of “malvertising” (malicious ads that can deliver malware, sometimes simply when a webpage loads) adds to the risk landscape. Meanwhile, AI-enabled, hyper-personalized scams are becoming harder to detect, targeting users through deepfakes, impersonation, and emotionally manipulative content. In this chaotic environment, trust has become a strategic asset.

Building Trust: Regulation, Explainability, and Safety in AI

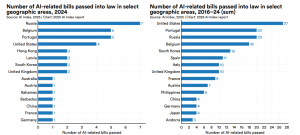

As digital trust erodes, calls for systemic change are intensifying. Governments around the world are accelerating efforts to regulate AI, with sharp differences emerging across regions. The EU’s AI Act and Digital Services Act are among the world’s most comprehensive regulatory frameworks, establishing sweeping obligations for transparency and risk management and banning certain harmful AI applications. In contrast, the U.S. maintains a fragmented approach in which regulatory momentum is most visible at the state level: in 2024 alone, U.S. states introduced over 130 AI-related bills.

A broader view from the AI Index 2025 shows that 39 countries passed at least one AI-related law between 2016 and 2024. Russia, Belgium, and Portugal led in the number of new AI-related laws passed in 2024, while the U.S. remains the overall leader in cumulative AI legislation.

Source: Stanford HAI, AI Index 2025: Number of AI-related regulations passed

Explainability and digital harm are also emerging as critical regulatory fronts. As the opacity of large models continues to fuel mistrust, enterprises are prioritizing model validation, monitoring, and mechanistic interpretability. This is particularly urgent in sectors like finance and healthcare, where AI decisions carry significant risks. The AI Index 2025 highlights that 51% of organizations report incidents of unintended AI decision-making, and 47% cite bias issues.

Beyond compliance, investment is becoming a competitive differentiator. Governments such as Canada, India, and France are coupling regulatory action with major AI investments ($2.4 billion, $1.25 billion, and €109 billion, respectively), raising the stakes for companies to align not only with national laws but also with evolving global standards of responsible AI.

In this shifting landscape, businesses that proactively address regulatory risks, embrace explainability, and invest in user-centric AI design will be better positioned to sustain trust and competitive advantage.

Toward a Trust-Centered Digital Ecosystem

The rising tide of ambivalence, meaning simultaneous engagement with and suspicion toward digital content, calls for a new philosophy centered on transparency. Consumers want to know when they are interacting with AI, what data is being used, and how authenticity is guaranteed. Blockchain verification, QR-code traceability, and content provenance tools are already being explored to “decontaminate” digital spaces. Meanwhile, new governance models must balance innovation with user safety, especially as AI tools become capable of generating media that is increasingly indistinguishable from authentic content.

Nowhere is this more urgent than in children’s digital safety. Parents are increasingly alarmed by their kids’ exposure to harmful content, from algorithm-driven radicalization to AI-generated images used in deepfake bullying. According to Dentsu Media’s 2025 Media Trends report, 56.5% of young adults (18–24) say social media significantly affects their self-identity, compared with just 23.3% of those over 55. In response, parents are pushing for stronger controls, with 64.7% globally agreeing that children’s social media use should be limited. Grassroots movements advocate banning smartphones for under-14s, while governments in the UK, France, China, and the U.S. are advancing age-specific safety rules, such as the proposed U.S. Kids Online Safety Act (KOSA). For brands, this marks a shift: the future of youth marketing may lie less in unfiltered digital access and more in “semi-digital” touchpoints like smartwatches, parental dashboards, and controlled platforms.

Use Cases

Ambivalent Reality: Glaze is a system designed to protect human artists from style mimicry. At a high level, Glaze analyzes how AI models learn from human art and uses machine learning to compute a set of minimal changes to an artwork, so that the piece looks essentially unchanged to human eyes but appears to AI models as a dramatically different art style.

Ambivalent Reality: As content provenance tools are explored to “decontaminate” digital spaces, the Coalition for Content Provenance and Authenticity (C2PA) is tackling the prevalence of misleading information online. It does so by developing technical standards for certifying the source and history (or provenance) of media content.

Ambivalent Reality: VerificAudio is a fact-checking app developed by PRISA Media to verify the authenticity of audio and detect deepfakes created with synthetic voices. It combines two custom-trained AI models that evaluate Spanish-language audio files. Because users can submit audio files for verification, the initiative aims to build a fact-checking culture that enlists audiences alongside journalists in the fight against disinformation.

Sub-Trend Sources

Ambivalent Reality: Accenture Life Trends, Economist Tech Trends, Stanford HAI Index Report

LLMs' Explainability: BBVA Spark Tech Trends

Children's Digital Harm: BBVA Spark Tech Trends

What to Read Next

Good Enough Life

From Aspirational To Sufficient Young people are abandoning broken milestones and rewriting the rules of adulthood. According to Deloitte’s 2024 Global Millennial Survey, 64% of Gen Z and Millennials believe[...]

Search Disruption

From Search to Conversation Search is no longer just about Google. In 2024, platforms like TikTok, Reddit, and ChatGPT began redefining how people seek information—especially in areas like health and[...]

Balancing Reality

Rebalancing Digital and Physical Life This trend has been unfolding since the Covid era, when people rediscovered nature, in-person socialization, and tangible connections. In sum, as digital saturation grows, people[...]