Flexible AI

Key Takeaway:

AI infrastructure is becoming more modular as enterprises blend cloud and on-premises data centers with edge environments (laptops, smartphones) to meet growing demands for speed, control, and efficiency. Hybrid models are now standard, while smaller, task-specific AI systems are increasingly deployed closer to the user. This shift toward on-device intelligence reflects a broader pivot to adaptable, privacy-conscious systems that optimize performance and cost across dynamic, distributed digital architectures.

Trend Type: Technology

Sub-trends: Hybrid Cloud Models, Closed LLMs Vs Open Small Models, AI: Different Needs, Different Solutions, On-device GenAI

The Rise of Specialized AI Models

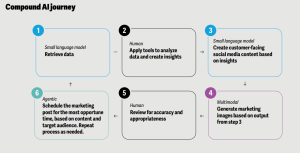

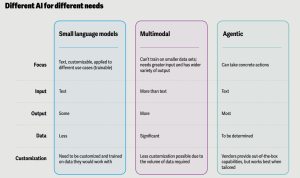

As organizations adopt hybrid models to balance flexibility and control, they are also rethinking how AI is deployed across environments. Foundation models built by large tech firms remain powerful, but many enterprises are shifting their focus to smaller, more efficient models suited to specific tasks. According to Thoughtworks and MIT, small language models (SLMs), typically with between 3.5 and 10 billion parameters, can match or outperform larger models in focused use cases. Because they require significantly less compute, SLMs can be deployed on edge devices and offer advantages in latency, privacy, and cost.

Source: Deloitte 2025 - Compound AI Journey
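A rough back-of-the-envelope estimate shows why models in the 3.5 to 10 billion parameter range are candidates for edge devices: model weights alone need roughly parameters × bits per parameter ÷ 8 bytes. The sketch below applies that formula assuming 16-bit and 4-bit weights. These precisions are illustrative choices, not figures from the sources cited here. It also ignores the KV cache, activations, and runtime overhead, so real memory footprints will be higher.

```python
def weight_memory_gb(params_billion: float, bits_per_param: int) -> float:
    """Approximate memory for model weights only, in decimal gigabytes.

    Assumption: excludes KV cache, activations, and framework overhead.
    """
    total_bytes = params_billion * 1e9 * bits_per_param / 8
    return total_bytes / 1e9


if __name__ == "__main__":
    # Illustrative precisions: 16-bit (fp16/bf16) and 4-bit quantized weights.
    for params in (3.5, 10):
        for bits in (16, 4):
            print(f"{params}B params @ {bits}-bit: ~{weight_memory_gb(params, bits):.2f} GB")
```

Under these assumptions, a 3.5-billion-parameter model needs about 7 GB at 16-bit but about 1.75 GB at 4-bit. That difference is why quantized SLMs can plausibly fit within the memory of a modern smartphone or laptop, while much larger models generally cannot.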

Interest in SLMs is not just technical; it is strategic. With growing concerns about data security and cloud dependency, companies are increasingly experimenting with models that run locally. Google’s Gemini Nano and Microsoft’s Phi-3 series are leading examples of this new generation. These lighter models can support use cases ranging from edge computing to enterprise document retrieval without requiring massive cloud infrastructure.

From the Cloud to the Device

The hybrid cloud conversation is now expanding beyond data centers and into people’s hands. On-device generative AI is poised to change how users interact with their most personal technologies: smartphones and laptops. Device innovation, once considered incremental, is being reenergized by advanced chips and next-generation operating systems designed specifically for local AI processing. According to Deloitte, by the end of 2025 more than 30% of smartphones shipped globally will be GenAI-enabled, and global smartphone shipment growth is projected to rise to 7%, up from 5% in 2024. Early adopters and developers are already embracing this shift, upgrading their devices for real-time AI capabilities.

Edge-based AI isn’t limited to mobile. Laptops and other hardware are also evolving, with manufacturers integrating Neural Processing Units (NPUs) and purpose-built AI tools directly into their systems. As Trendhunter notes, professionals increasingly rely on AI services in their daily work, and hardware is catching up to meet that demand. NPUs enable everything from on-device transcription to smart automation without sending data to the cloud.

Thoughtworks highlights frameworks such as MLX and Chatty that allow large language models to run on edge devices and directly in web browsers. These tools enable real-time image or video processing, ultra-low-latency interactions, and greater privacy through local inference. As the LLM landscape evolves, the industry is embracing the notion that “different horses for different courses” will define the next wave of AI adoption. General-purpose models still have value for broad applications, but smaller, task-specific models, together with the hybrid architectures that support them, are reshaping what scalable, secure AI looks like across devices and infrastructure.

Hybrid by Design

Hybrid cloud models are emerging as the foundational architecture for the next era of enterprise computing. By blending the speed, reliability, and security of on-premises systems with the scalability and cost-efficiency of public cloud platforms, businesses are rethinking how they manage workloads, secure data, and scale digital operations. These models not only support compliance and minimize downtime but also improve resilience in the face of evolving cybersecurity threats.

Cloud computing remains central to IT strategies, but scrutiny of cost and operational efficiency is intensifying. According to Forbes Tech Predictions, CIOs and CTOs are under pressure to rationalize their public cloud spending, prioritizing sound architectures over expansive deployments. The outages experienced by major providers such as AWS and Azure last summer have accelerated the move toward multi-cloud environments, with companies distributing workloads across a mix of public, private, and edge infrastructure. This shift is less about abandoning the cloud and more about resilience: ensuring systems can scale and recover without being locked into a single vendor.

Forrester echoes this shift, noting a resurgence in on-premises computing as businesses confront issues of sovereignty, cost control, and regulatory compliance. Most large enterprises already operate in hybrid mode and are now expanding their private cloud capabilities to manage sensitive workloads such as AI model pretraining, RAG (Retrieval-Augmented Generation, a technique that improves a large language model’s output by retrieving relevant proprietary information and supplying it to the model at query time) integration, and AI agent automation. However, pricing and bundling changes by dominant players such as VMware may limit that expansion, prompting interest in hyperconverged solutions like Nutanix or open-source options like OpenStack. Forrester predicts that major public cloud providers will continue to invest in private cloud offerings, with private and public cloud growing in parallel rather than in competition.

Source: Deloitte 2025 - Different AI for Different Needs

Use Cases

Closed LLMs Vs Open Small Models: Phi-3 is a family of small, open AI models from Microsoft that deliver top-tier performance at low cost and with fast response times. The Phi-3 Mini, Small, Medium, and Vision models are especially efficient, offering capabilities comparable to much larger models thanks to advanced scaling techniques and carefully selected training data.

On-device GenAI: Adobe’s SlimLM brings document processing directly to the smartphone, with no internet connection required. Unlike larger cloud-dependent models, SlimLM is compact and efficient, and it performs complex tasks such as summarization and question answering on the device itself.

Small LLMs: Gemma 3 is a new family of AI models from Google designed to run anywhere, from phones to servers. It offers improved performance, supports multimodal and multilingual tasks, and gives users flexible options through pre-trained and instruction-tuned versions.

Sub-Trend Sources

Hybrid Cloud Models: Accenture Tech Vision, Forbes Tech Predictions, Forrester Predictions: Technology and Security, Deloitte Tech Trends

Closed LLMs Vs Open Small Models: Accenture Tech Vision, Deloitte Tech Trends

AI: Different Needs, Different Solutions: Accenture Tech Vision, Deloitte Tech Trends

On-device GenAI: Accenture Tech Vision, Deloitte Tech Trends

What to Read Next

Embodied AI

Embodied AI and Robotics The integration of foundation models into robotics is revolutionizing how machines interact with the world, enabling them to reason, adapt, and operate autonomously in dynamic environments.[...]

Energy Challenge

The Collision of AI and Energy Demand The rise of generative AI is not only transforming digital landscapes—it’s also reshaping the global energy equation. According to Deloitte, the rapid expansion[...]

Scientific AI

AI Meets Scientific Discovery A new frontier is opening where AI is not merely a support tool for scientific research—it’s becoming a catalyst for fundamental breakthroughs. This shift was symbolically[...]
